I’ve previously written about health checking/monitoring in the blog posts Health Checking / Monitoring Exchange Server 2013/2016 and Lync Server 2013 Health Checking. These posts are very popular, so I decided to write a similar post about Health Checking / Monitoring SharePoint 2016.

This time, however, there weren’t many useful scripts available. Almost all of the scripts I found were too basic, consisting of just a few PowerShell commands. I, on the other hand, was looking for something similar to the Exchange health report, including a weekly email and so forth. That said, I finally managed to find a couple of nice sources:

http://abhayajoshi.blogspot.com/2016/05/sharepoint-server-daily-check.html

https://gallery.technet.microsoft.com/SharePoint-Server-Check-V10-f5e205b4

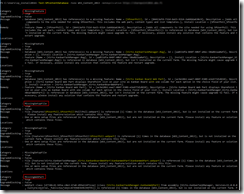

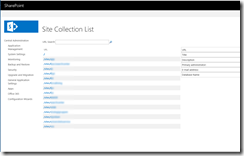

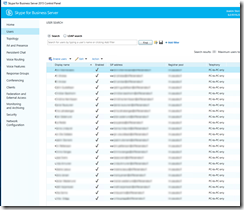

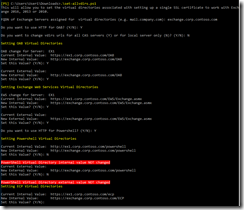

My own script is a modified version of the one found in the first link, with “borrowed” features from the one found in the second link. The end result looks like this, and is just what I was looking for:

(WordPress is not that cooperative with large pictures, so use your browser’s zoom feature on this embedded picture instead.)

You’ll get all the important stuff from the email report – ranging from basic information such as memory and disk usage to more advanced stuff like core SharePoint services and application reports including upgrade needs. I don’t mind sharing either, so here’s my modified PowerShell script (with $servers and email addresses censored):

$head = '<style>body{font-family:Calibri;font-size:10pt;}th{background-color:black;color:white;}td{background-color:#19fff0;color:black;}h4{margin-right: 0px; margin-bottom: 0px; margin-left: 0px;}</style>'

# Add SharePoint PowerShell Snap-In

Add-PSSnapin Microsoft.SharePoint.PowerShell -ErrorAction SilentlyContinue

# SharePoint servers, use comma to separate.

$servers = @("xxxxx")

#===============#

# Server Report #

#===============#

# Memory Utilization

# Modified to display GB instead of KB (Win32_OperatingSystem reports these values in kilobytes), source:

# https://www.petri.com/display-memory-usage-powershell

# I'm skipping the free percentage stuff //JS

#$Memory = Get-WmiObject -Class Win32_OperatingSystem -ComputerName $servers| Select CsName , FreePhysicalMemory , TotalVisibleMemorySize , Status | ConvertTo-Html -Fragment

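# -ComputerName added so every server in $servers is reported (without it only the local server is queried); CSName shows which server each row belongs to

$Memory = Get-CimInstance Win32_OperatingSystem -ComputerName $servers | Select CSName, @{Name = "Total(GB)";Expression = {[int]($_.TotalVisibleMemorySize/1mb)}},

@{Name = "Free(GB)";Expression = {[math]::Round($_.FreePhysicalMemory/1mb,2)}} | ConvertTo-Html -Fragment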

#Disk Report

$disk = Get-WmiObject -Class Win32_LogicalDisk -Filter DriveType=3 -ComputerName $servers |

Select DeviceID , @{Name="Size(GB)";Expression={"{0:N1}" -f($_.size/1gb)}}, @{Name="Free space(GB)";Expression={"{0:N1}" -f($_.freespace/1gb)}} | ConvertTo-Html -Fragment

# Server UpTime

$FarmUpTime = Get-WmiObject -class Win32_OperatingSystem -ComputerName $servers |

Select-Object __SERVER,@{label='LastRestart';expression={$_.ConvertToDateTime($_.LastBootUpTime)}} | ConvertTo-Html -Fragment

# Core SharePoint services

#Borrowed and modified from https://gallery.technet.microsoft.com/SharePoint-Server-Check-V10-f5e205b4 //JS

# Service names to look for. OSearch16 is the SharePoint Server 2016 search service; OSearch15 is its SharePoint 2013 counterpart.

$coreServiceNames = @("AppFabricCachingService", "c2wts", "FIMService", "FIMSynchronizationService", "Service Bus Gateway", "Service Bus Message Broker", "SPAdminV4", "SPSearchHostController", "OSearch15", "OSearch16", "SPTimerV4", "SPTraceV4", "SPUserCodeV4", "SPWriterV4", "FabricHostSvc", "WorkflowServiceBackend", "W3SVC", "NetPipeActivator")

$coreservices = Get-WmiObject -Class Win32_Service -ComputerName $servers | Where-Object { $coreServiceNames -contains $_.Name } | Select-Object DisplayName, StartName, StartMode, State, Status | ConvertTo-Html -Fragment

#====================#

# Application Report #

#====================#

# SharePoint Farm Status

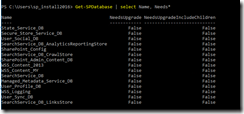

$SPFarm = Get-SPFarm | select Name , NeedsUpgrade , Status , BuildVersion | ConvertTo-Html -Fragment

# Web Application Pool Status

#Source: http://stevemannspath.blogspot.com/2013/06/sharepoint-2013-listing-out-existing.html

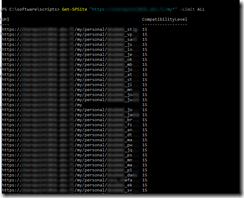

$WAppPool = [Microsoft.SharePoint.Administration.SPWebService]::ContentService.ApplicationPools | select Name, Username,Status | ConvertTo-Html -Fragment

# Web Application Status Check

$WebApplication = Get-SPWebApplication | Select Name , Url , ContentDatabases , NeedsUpgrade , Status | ConvertTo-Html -Fragment

# IIS Total number of current connections

$IIS1 = Get-Counter -ComputerName $servers -Counter "\web service(_total)\Current Connections" |

Select Timestamp , Readings | ConvertTo-Html -Fragment

# Service Application Pool Status

#Returns the specified Internet Information Services (IIS) application pool. NOTE, these are the SERVICE app pools, NOT WEB app pools.

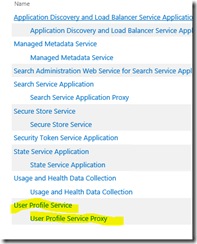

$SAppPool = Get-SPServiceApplicationPool | Select Name , ProcessAccountName , status | ConvertTo-Html -Fragment

# Service Application Status

$ServiceAppplication = Get-SPServiceApplication | Select DisplayName , ApplicationVersion , Status , NeedsUpgrade | ConvertTo-Html -Fragment

# Service Application Proxy Status

$ApplicationProxy = Get-SPServiceApplicationProxy | Select TypeName , Status , NeedsUpgrade | ConvertTo-Html -Fragment

# Search Administration Component Status

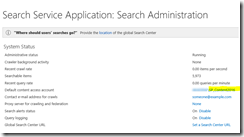

$SPSearchAdminComponent = Get-SPEnterpriseSearchAdministrationComponent -SearchApplication "Search Service Application" | Select Servername , Initialized | ConvertTo-Html -Fragment

# Search Scope Status

$SearchScope = Get-SPEnterpriseSearchQueryScope -SearchApplication "Search Service Application" | Select Name , Count | ConvertTo-Html -Fragment

# WARNING: The command 'Get-SPEnterpriseSearchQueryScope' is obsolete. Search Scopes are obsolete, use Result Sources instead.

# The below code is maybe not an exact alternative to search scope, but at least something...

# Source: https://docs.microsoft.com/en-us/powershell/module/sharepoint-server/get-spenterprisesearchresultsource?view=sharepoint-ps

# Still using Get-SPEnterpriseSearchQueryScope though, as the output from Get-SPEnterpriseSearchServiceApplication/Get-SPEnterpriseSearchResultSource is not exactly what I want.

#$ssa = Get-SPEnterpriseSearchServiceApplication -Identity 'Search Service Application'

#$owner = Get-SPEnterpriseSearchOwner -Level SSA

#$SearchScope = Get-SPEnterpriseSearchResultSource -SearchApplication $ssa -Owner $owner | select Name, Description, Active | ConvertTo-Html -Fragment

#Search Query Topology Status

#Using Get-SPEnterpriseSearchTopology instead of Get-SPEnterpriseSearchQueryTopology

$SPQueryTopology = Get-SPEnterpriseSearchTopology -SearchApplication "Search Service Application" | select TopologyId , State | ConvertTo-Html -Fragment

# $SPQueryTopology = Get-SPEnterpriseSearchQueryTopology -SearchApplication "Search Service Application" | select ID, State | ConvertTo-Html -Fragment

# The term 'Get-SPEnterpriseSearchQueryTopology' is not recognized as the name of a cmdlet, function, script file, or operable program.

# ID not in use, using TopologyId instead (with Get-SPEnterpriseSearchTopology).

# Content Sources Status with Crawl Log Counts

$SPContentSource = Get-SPEnterpriseSearchCrawlContentSource -SearchApplication "Search Service Application" |

Select Name , SuccessCount , CrawlStatus, LevelHighErrorCount, ErrorCount, DeleteCount, WarningCount | ConvertTo-Html -Fragment

# Crawl Component Status

#$SPCrawlComponent = Get-SPEnterpriseSearchCrawlComponent -SearchApplication "Search Service Application" -CrawlTopology "0e49b522-4b7b-44d5-bc77-99b53e1c9f03"| Select ServerName , State , DesiredState , IndexLocation | ConvertTo-Html -Fragment

$SPCrawlComponent = Get-SPEnterpriseSearchStatus -SearchApplication "Search Service Application" | Select Name , State | ConvertTo-Html -Fragment

# OLD COMMAND: Get-SPEnterpriseSearchServiceApplication | Get-SPEnterpriseSearchTopology

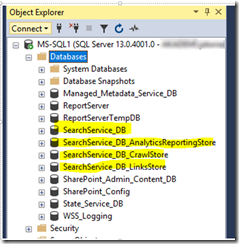

# Not entirely the same results as for SharePoint 2010, but it'll do.

# Content Database Status

$DBCheck = Get-SPDatabase | Select Name , Status , NeedsUpgrade , @{Name="size(GB)";Expression={"{0:N1}" -f($_.Disksizerequired/1gb)}} | ConvertTo-Html -Fragment

# Failed Timer Jobs, Last 7 days

$f = Get-SPFarm

$ts = $f.TimerService

$jobs = $ts.JobHistoryEntries | ?{$_.Status -eq "Failed" -and $_.StartTime -gt ((get-date).AddDays(-7))}

#$failedJobs=$jobs.Count //.Count seems to do nothing. This one does something on the other hand:

$failedjobs = $jobs | select StartTime,JobDefinitionTitle,Status,ErrorMessage | ConvertTo-Html -Fragment

# SharePoint Solution Status

#$SPSolutions = Get-SPSolution | Select Name , Deployed , Status | ConvertTo-Html -Fragment

#=======================#

# Table for html output #

#=======================#

$legendStat="The checks are considered 'Green' in terms of RAG Status when status values are one of the following: <br>

<b>OK, Online, Ready, Idle, CrawlStarting, Active, True</b>"

ConvertTo-Html -Body "

<font color = brown><H2><B><br>Server Report:</B></H2></font>

<font color = blue><H4><B>Memory Usage</B></H4></font>$Memory

<font color = blue><H4><B>Disk Usage</B></H4></font>$disk

<font color = blue><H4><B>Uptime</B></H4></font>$Farmuptime

<font color = blue><H4><B>SharePoint Server Core Services Status</B></H4></font>$coreservices

<font color = brown><H2><B><br>Application Report:</B></H2></font>

<font color = blue><H4><B>Note :</B></H4></font>$legendStat

<font color = blue><H4><B>Farm Status</B></H4></font>$SPFarm

<font color = blue><H4><B>Web Application POOL Status</B></H4></font>$WAppPool

<font color = blue><H4><B>Web Application Status</B></H4></font>$WebApplication

<font color = blue><H4><B>IIS: Current number of active connections</B></H4></font>$IIS1

<font color = blue><H4><B>Service Application POOL Status</B></H4></font>$SAppPool

<font color = blue><H4><B>Service Application Status</B></H4></font>$ServiceAppplication

<font color = blue><H4><B>Service Application Proxy Status</B></H4></font>$ApplicationProxy

<font color = blue><H4><B>Search Administration Component Status</B></H4></font>$SPSearchAdminComponent

<font color = blue><H4><B>Search Scope Status</B></H4></font>$SearchScope

<font color = blue><H4><B>Search Query Topology Status</B></H4></font>$SPQueryTopology

<font color = blue><H4><B>Content Sources Status with Crawl Log Counts</B></H4></font>$SPContentSource

<font color = blue><H4><B>Crawl Component Status</B></H4></font>$SPCrawlComponent

<font color = blue><H4><B>Content Database Status</B></H4></font>$DBCheck

<font color = blue><H4><B>Failed Timer Jobs, Last 7 days</B></H4></font>$failedJobs

" -Title "SharePoint Farm Health Check Report" -Head $head | Out-File C:\software\scripts\ServerAndApplicationReport.html

# Document Inventory Module

#Document Inventory Report

[void][System.Reflection.Assembly]::LoadWithPartialName("Microsoft.SharePoint")

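# Note: this function is not called by the report above; run it separately if you need a per-item inventory of a site collection's document libraries.

function Get-DocumentLibraryInventory([string]$siteUrl) {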

$site = New-Object Microsoft.SharePoint.SPSite $siteUrl

foreach ($web in $site.AllWebs) {

foreach ($list in $web.Lists) {

if ($list.BaseType -ne "DocumentLibrary") {continue}

foreach ($item in $list.Items) {

$data = @{

"Site" = $site.Url

"Web" = $web.Url

"list" = $list.Title

"Item ID" = $item.ID

"Item URL" = $item.Url

"Item Title" = $item.Title

"Item Created" = $item["Created"]

"Item Modified" = $item["Modified"]

"File Size" = $item.File.Length/1KB

}

New-Object PSObject -Property $data

}

}

$web.Dispose();

}

$site.Dispose()

}

#=================================#

# Send email to SharePoint Admins #

#=================================#

Function logstamp {

$now=get-Date

$yr=$now.Year.ToString()

$mo=$now.Month.ToString()

$dy=$now.Day.ToString()

$hr=$now.Hour.ToString()

$mi=$now.Minute.ToString()

if ($mo.length -lt 2) {

$mo="0"+$mo #pad single digit months with leading zero

}

if ($dy.length -lt 2) {

$dy="0"+$dy #pad single digit day with leading zero

}

if ($hr.length -lt 2) {

$hr="0"+$hr #pad single digit hour with leading zero

}

if ($mi.length -lt 2) {

$mi="0"+$mi #pad single digit minute with leading zero

}

Write-Output "$dy-$mo-$yr"

}

$mailDate = logstamp

$To = "xxxx@xxxx.fi"

$Cc = "xxxxx.xxxxx@xxxx.fi"

$From = "xxxxxxxxxxxx@xxxx.fi"

$SMTP = "xxxx.xxxx.fi"

$Subject = "[SharePoint] Server and Application Report - $mailDate"

# -Raw reads the report as one string (line breaks preserved) instead of an array of lines

$Report = Get-Content C:\software\scripts\ServerAndApplicationReport.html -Raw

Send-MailMessage -To $To -Cc $Cc -From $From -Subject $Subject -SmtpServer $SMTP -Body $Report -BodyAsHtml
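The `logstamp` function in the script pads the day, month, hour and minute by hand, although only the day, month and year end up in the stamp. If you prefer something shorter, a .NET format string produces the same zero-padded dd-MM-yyyy value. This is a minimal sketch; `Get-MailDateStamp` is a hypothetical name, not part of the original script:

```powershell
# Hypothetical replacement for logstamp: returns a date as dd-MM-yyyy.
# 'dd' and 'MM' are zero-padded by the format string, so no manual padding is needed.
function Get-MailDateStamp {
    param(
        [datetime]$Date = (Get-Date)
    )
    $Date.ToString('dd-MM-yyyy')
}

# Usage, mirroring the script's subject line
$mailDate = Get-MailDateStamp
$Subject  = "[SharePoint] Server and Application Report - $mailDate"

Get-MailDateStamp -Date ([datetime]'2018-03-05')   # 05-03-2018
```

Swapping it in would only mean changing the `$mailDate` assignment to call `Get-MailDateStamp` instead of `logstamp`.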

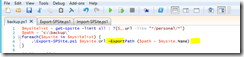

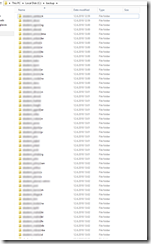

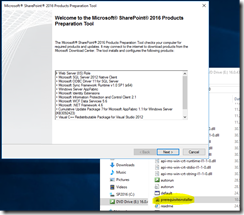

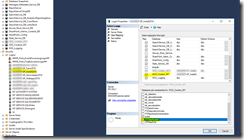

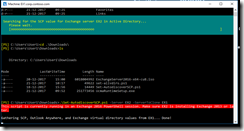

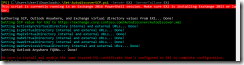
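A note on units in the memory part of the script: `Win32_OperatingSystem` reports `TotalVisibleMemorySize` and `FreePhysicalMemory` in kilobytes, so dividing by `1mb` (1,048,576) gives gigabytes, not megabytes. The hypothetical helper below isolates that arithmetic so it can be checked without a server. The sample values are made up:

```powershell
# Hypothetical helper: converts a kilobyte value (as reported by Win32_OperatingSystem)
# to gigabytes. 1 KB * 1,048,576 = 1 GB, so dividing kilobytes by 1MB yields gigabytes.
function ConvertTo-GBFromKB {
    param(
        [Parameter(Mandatory)]
        [double]$Kilobytes,
        [int]$Decimals = 2
    )
    [math]::Round($Kilobytes / 1MB, $Decimals)
}

# Made-up values: 16 GB total, 1.5 GB free
$os = [pscustomobject]@{ TotalVisibleMemorySize = 16777216; FreePhysicalMemory = 1572864 }
ConvertTo-GBFromKB -Kilobytes $os.TotalVisibleMemorySize   # 16
ConvertTo-GBFromKB -Kilobytes $os.FreePhysicalMemory       # 1.5
```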

The script itself is located in c:\software\scripts on the server and is run from Task Scheduler once a week. It produces the report shown in the first screenshot.
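Because the report goes out once a week, the failed timer job check in the script looks back seven days, so each run covers the period since the previous one. The filtering logic can be tried out without a farm. The sketch below uses made-up objects that carry only the two properties the script filters on (`Status` and `StartTime`). The function name is hypothetical, and wrapping the call in `@()` guarantees an array, so `.Count` is correct whether zero, one or many jobs match:

```powershell
# Hypothetical helper mirroring the script's filter:
# keep entries whose Status is "Failed" and that started within the last $Days days.
function Select-RecentFailedJob {
    param(
        [object[]]$Entries,
        [int]$Days = 7,
        [datetime]$Now = (Get-Date)
    )
    $cutoff = $Now.AddDays(-$Days)
    $Entries | Where-Object { $_.Status -eq 'Failed' -and $_.StartTime -gt $cutoff }
}

# Made-up history entries standing in for $ts.JobHistoryEntries
$now = [datetime]'2018-03-12 08:00'
$history = @(
    [pscustomobject]@{ JobDefinitionTitle = 'Job A'; Status = 'Failed';    StartTime = $now.AddDays(-2) }
    [pscustomobject]@{ JobDefinitionTitle = 'Job B'; Status = 'Succeeded'; StartTime = $now.AddDays(-1) }
    [pscustomobject]@{ JobDefinitionTitle = 'Job C'; Status = 'Failed';    StartTime = $now.AddDays(-10) }
)

# @() keeps the result an array even when PowerShell unrolls a single match
$failed = @(Select-RecentFailedJob -Entries $history -Now $now)
$failed.Count                  # 1
$failed[0].JobDefinitionTitle  # Job A
```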

The weekly run command is shown in the screenshot below.

Side note:

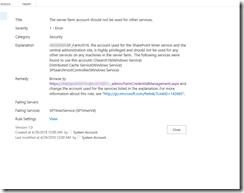

You can also get SharePoint’s own Health Analyzer Alerts from PowerShell (should you have the need to):

http://itgroove.net/mccalec/2015/03/13/getting-current-health-analyzer-alerts-with-powershell/
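Apart from the Health Analyzer, the report’s own legend (`$legendStat` in the script) can be turned into a simple check if you’d rather not scan every table by eye. This is a sketch that assumes you only care about the listed Green values. `Test-GreenStatus` and the sample rows are hypothetical:

```powershell
# Hypothetical helper: $true when a status value is one of the legend's 'Green' values.
# The default list is copied from $legendStat in the script.
function Test-GreenStatus {
    param(
        $Value,
        [string[]]$GreenValues = @('OK', 'Online', 'Ready', 'Idle', 'CrawlStarting', 'Active', 'True')
    )
    # Converting to a string lets enum values (Online) and booleans ($true -> 'True') match too;
    # -contains compares strings case-insensitively.
    $GreenValues -contains [string]$Value
}

# Made-up rows standing in for, e.g., Get-SPServiceApplication output
$rows = @(
    [pscustomobject]@{ DisplayName = 'Search Service Application'; Status = 'Online' }
    [pscustomobject]@{ DisplayName = 'Managed Metadata Service';   Status = 'Disabled' }
)

# Collect the rows whose Status is not Green
$notGreen = @($rows | Where-Object { -not (Test-GreenStatus $_.Status) })
$notGreen.DisplayName   # Managed Metadata Service
```

Keep in mind that the legend lists True as Green. That fits columns such as Initialized, but for NeedsUpgrade a True value means there is work to do, so a check like this is best applied per column rather than across the whole report.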

And that’s all there is to it – pretty short and effective post this time around 🙂 Enjoy!